Archives

-

Rename JavaScriptConvert?

I am considering renaming the JavaScriptConvert class to JsonConvert.

When Json.NET was first released it contained a number of data classes that were prefixed with JavaScript: JavaScriptObject, JavaScriptArray and JavaScriptConstructor. JavaScriptConvert was used to convert the data objects to text and back again. In 2.0 these classes were removed and replaced by the LINQ to JSON API (JObject, JArray, etc.), but JavaScriptConvert remained.

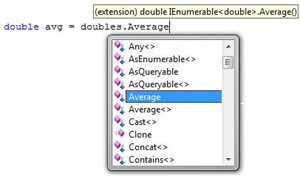

The current state of the nation is that just about every Json.NET class is prefixed with Json (JsonReader, JsonWriter, JsonSerializer) except JavaScriptConvert, a situation which I think is somewhat confusing and gets in the way of learning and discovering the Json.NET API through IntelliSense.

The downside of renaming JavaScriptConvert is that existing code that uses it will need to be updated when moving to a new version of Json.NET. A pain, but nothing that Find and Replace couldn’t fix in 5 minutes. A using alias directive would also keep existing code compiling:

using JavaScriptConvert = Newtonsoft.Json.JsonConvert;

Thoughts? Objections?

-

Build Server Rules

Breaking the build server is Serious Business. Fortunately there are a set of rules put together by a group of wise and thoughtful men to encourage good build server practice. And beer.

Build Server Rules

- The penalty for breaking the build is a beer.

- A broken build beer can only be written up while the build is broken.

- Once the build is broken, broken build beers will not be given until the build is fixed. It is considered bad form for other users to check in while the build is broken.

- Broken builds resulting from environmental factors on the build server such as locked files or other transient behavior shall not be penalized.

- Amnesty can be requested for tasks that can only be tested on the build server.

- Amnesty must be requested in advance.

- Amnesty requires the agreement of the build server council.

- While amnesty is in effect anyone can check into the build server with impunity.

- Amnesty ends once the task is complete and the build server is green.

- Build beers must be cashed in upon a contributor reaching 12 broken builds. Multiples of 6 may be purchased.

- Disputes are judged by a majority ruling of the build server council. Decisions and lulz are final.

Just as developers must learn to honor the build server, testers must also be kept on their toes for that rare occasion when your code contains a bug.

QA Rules

- Each bug that makes it into a release undiscovered, and that the council judges should have been found, is a beer.

- The penalty for a bug found existing in multiple releases is a QA beer per release.

-

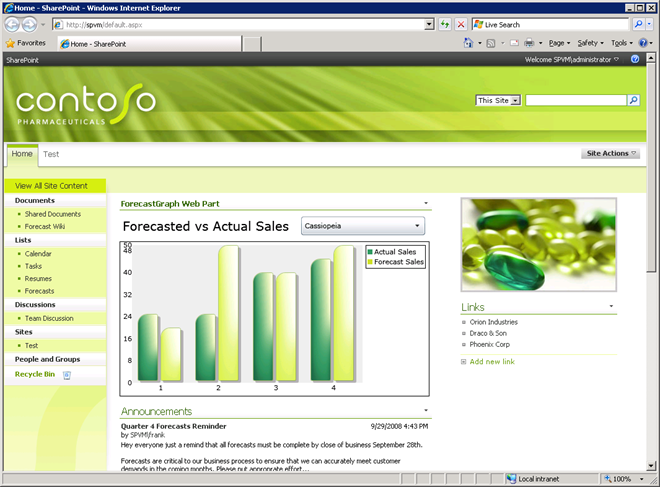

PDC 2008: Creating SharePoint Applications with Visual Studio 2008

The rip in the fabric of space time between our universe and the bizarro universe, where I am a SharePoint expert, continues to grow. First I presented a 400-level SharePoint session at TechEd and now I have helped put together a SharePoint presentation for PDC!

“Creating SharePoint Applications with Visual Studio 2008” presented by Chris Johnson walks through creating a SharePoint application using the Visual Studio 2008 extensions for SharePoint (VSeWSS). A bunch of people from Intergen contributed to the end product. I helped write the overall script with Mark Orange, one of Intergen’s actual SharePoint experts, and I wrote the application used in the presentation.

The application featured a new project announced at PDC: the Silverlight Control Toolkit. SharePoint hosted a Silverlight chart control that visualized data retrieved from a WCF web service and from SharePoint’s web services.

PDC Fireworks

The Wellington .NET user group had a special event last week covering what was announced at PDC. I gave a brief 15-minute introduction to using the Silverlight chart control. You can get the slides from my talk (all 6 of them!) here.

-

Thoughts on C# 4.0 and .NET 4.0

C# 4.0 Dynamic Lookup

I really like the way the C# team tackled bringing dynamic programming to the language. C# is a static language, but it is good to see that the C# team is pragmatic enough to realise that there will always be situations where you don’t have type information at compile time.

With C# 4.0 rather than having to bust out ugly System.Reflection operations to interact with unknown objects, the new dynamic lookup hides any ugliness away from the developer. Statically typing an object as dynamic is a great solution as it lets you treat a specific object, which you don’t have type knowledge of, as you want with regular property and method calls while still keeping static typing throughout the rest of your application. I think this is a much better solution than the dynamic block discussed in the past.
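To make the contrast concrete, here is a minimal sketch, assuming a C# 4.0 or later compiler, of reading a property from an object whose type isn't known at compile time: first through reflection, then through dynamic. The class and method names are made up for illustration.

```csharp
using System.Reflection;

// Hypothetical demo class comparing the two approaches.
public static class DynamicLookupDemo
{
    // Pre-C# 4.0: look the property up by name through reflection.
    // (GetProperty returns null if there is no such property.)
    public static object GetLengthViaReflection(object target)
    {
        PropertyInfo property = target.GetType().GetProperty("Length");
        return property.GetValue(target, null);
    }

    // C# 4.0: the reference is statically typed as dynamic, so the member
    // lookup is deferred to runtime but the call site reads like normal C#.
    public static object GetLengthViaDynamic(object target)
    {
        dynamic d = target;
        return d.Length;
    }
}
```

Both methods work for a string or an array; only the dynamic version keeps the call looking like an ordinary property access.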

Dynamic lookup also opens up some interesting new paradigms by offering the IDynamicObject interface to developers. The first thing I thought of when I saw it was Dynamic LINQ to JSON. I’m sure people will find uses for it that no one ever considered [:)]
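For what it's worth, the interface previewed here shipped in .NET 4.0 as IDynamicMetaObjectProvider, along with a System.Dynamic.DynamicObject base class that is much easier to derive from. A minimal sketch of a property bag in the spirit of dynamic LINQ to JSON might look like this; DynamicBag is a hypothetical name and nothing here is part of Json.NET.

```csharp
using System.Collections.Generic;
using System.Dynamic;

// Hypothetical wrapper (not part of Json.NET): dictionary keys are exposed
// as properties, so bag.Name reads and writes the "Name" entry.
public class DynamicBag : DynamicObject
{
    private readonly Dictionary<string, object> _values = new Dictionary<string, object>();

    public override bool TryGetMember(GetMemberBinder binder, out object result)
    {
        // Returning false tells the runtime binder the member doesn't exist.
        return _values.TryGetValue(binder.Name, out result);
    }

    public override bool TrySetMember(SetMemberBinder binder, object value)
    {
        _values[binder.Name] = value;
        return true;
    }
}
```

Returning false from TryGetMember makes the C# runtime binder throw a RuntimeBinderException, so a misspelled member name fails loudly at runtime rather than quietly returning null.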

C# 4.0 Covariance and Contravariance

Very happy to see this feature. I bumped my head into generic variance issues a number of times when writing LINQ to JSON. No longer having to worry about IEnumerable<JToken> not being compatible with IEnumerable<object> is great. They even kept it type safe!
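A small sketch of what variance allows, assuming C# 4.0 or later and using string in place of JToken so it only depends on the base class library. The helper names are hypothetical.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class VarianceDemo
{
    // Accepts any sequence of objects.
    public static int CountNonNull(IEnumerable<object> items)
    {
        return items.Count(i => i != null);
    }

    public static int CountStrings(List<string> strings)
    {
        // Covariance: IEnumerable<out T> lets an IEnumerable<string> be used
        // as an IEnumerable<object>. IList<T> is not variant, so
        // IList<object> list = strings; would still fail to compile.
        IEnumerable<object> objects = strings;
        return CountNonNull(objects);
    }

    // Contravariance runs the other way: IComparer<in T> lets a comparer of
    // objects be used where a comparer of strings is expected.
    public static IComparer<string> AsStringComparer(IComparer<object> comparer)
    {
        return comparer;
    }
}
```

Only interfaces that mark T as out (it is only returned) or in (it is only accepted) get these conversions, which is how the feature stays type safe.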

C# 4.0 Named and optional parameters

Is it just me or did Anders wince when announcing named and optional parameter support in C# 4.0? Microsoft has said that they plan to keep the C#/VB.NET languages in sync and I wonder if this is C# inheriting VB.NET features by default.
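For anyone who hasn't used them in VB.NET, here is a minimal sketch of both features, assuming C# 4.0 or later; FormatPrice and its parameters are invented for illustration.

```csharp
using System.Globalization;

public static class ParameterDemo
{
    // currency and decimals are optional: the compiler substitutes these
    // defaults at the call site when the arguments are omitted.
    public static string FormatPrice(decimal amount, string currency = "NZD", int decimals = 2)
    {
        return currency + " " + amount.ToString("F" + decimals, CultureInfo.InvariantCulture);
    }
}

// Usage:
//   ParameterDemo.FormatPrice(50m)                           // "NZD 50.00"
//   ParameterDemo.FormatPrice(50m, decimals: 0)              // named argument skips currency
//   ParameterDemo.FormatPrice(currency: "USD", amount: 9.5m) // named arguments in any order
```

Because the defaults are compiled into the calling code, changing a default in a library doesn't affect existing callers until they are recompiled.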

.NET 4.0 Code Contracts

From everything I have seen of it so far I love the Code Contracts feature. Statically defining type information has been around forever and is A Good Thing. It’s about time that we are able to statically define valid values as well.

.NET 4.0 Parallel Extensions

It is interesting to see parallel programming come as a library addition in the form of Parallel Extensions rather than a language feature (ditto for code contracts). I guess with a library you only need to create it once rather than having to figure out new concurrent programming syntax for every .NET language.
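As an illustration of the library approach, here is a minimal sketch using Parallel.For (System.Threading.Tasks) and PLINQ's AsParallel (System.Linq), both of which shipped with .NET 4.0. The method names are hypothetical.

```csharp
using System.Linq;
using System.Threading.Tasks;

public static class ParallelDemo
{
    // Parallel.For: the loop body is an ordinary lambda, no new syntax.
    public static long[] SquaresWithParallelFor(int count)
    {
        long[] results = new long[count];
        Parallel.For(0, count, i =>
        {
            // Each iteration writes only its own slot, so no locking is needed.
            results[i] = (long)i * i;
        });
        return results;
    }

    // PLINQ: AsParallel() turns an ordinary LINQ query into a parallel one.
    public static long SumOfSquaresWithPlinq(int count)
    {
        return Enumerable.Range(0, count).AsParallel().Select(i => (long)i * i).Sum();
    }
}
```

All of the parallelism comes from method calls that take delegates, so any .NET language that can pass a lambda gets it for free.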

Bring on VS2010.

-

Json.NET 3.5 Beta 1 – Big performance improvements, Compact Framework support and more

I am starting to get organised towards releasing Json.NET 3.5. There are a lot of good new features coming in this version, almost all of them driven by user requests.

Compact Framework Support

Json.NET 3.5 includes a build for the Compact Framework 3.5. It supports all the major features of Json.NET.

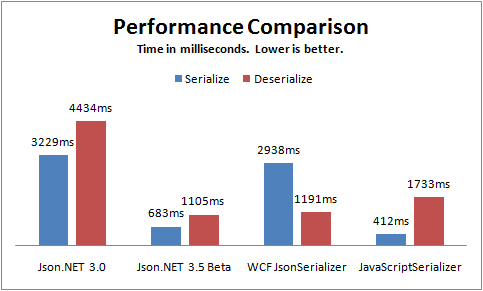

Performance Improvements

A lot of features have been added to Json.NET over the past couple of years and it has been a long time since I last did any performance tuning.

I busted out a profiler and found some big gains in the JsonSerializer by doing some basic caching of type data. I managed to eke out a 400% increase in performance. Not bad for 20 minutes’ work.
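The serializer's internals aren't shown here, but the general technique looks something like the sketch below: reflect over a type once, store the result in a dictionary keyed by Type, and reuse it on every later call. This is a hypothetical illustration written against modern .NET (ConcurrentDictionary arrived in .NET 4.0), not Json.NET's actual code.

```csharp
using System;
using System.Collections.Concurrent;
using System.Reflection;
using System.Threading;

// Hypothetical per-type reflection cache; not Json.NET's implementation.
public static class TypeMemberCache
{
    private static readonly ConcurrentDictionary<Type, PropertyInfo[]> Cache =
        new ConcurrentDictionary<Type, PropertyInfo[]>();

    private static int _reflectionCalls;

    // How many times reflection actually ran, to show the cache working.
    public static int ReflectionCalls => _reflectionCalls;

    public static PropertyInfo[] GetProperties(Type type)
    {
        // GetOrAdd only runs the factory on a cache miss. Under contention it
        // may run more than once for the same type, but only one result is kept.
        return Cache.GetOrAdd(type, t =>
        {
            Interlocked.Increment(ref _reflectionCalls);
            return t.GetProperties(BindingFlags.Public | BindingFlags.Instance);
        });
    }
}
```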

Below is a comparison of Json.NET 3.0, Json.NET 3.5 Beta 1, the WCF DataContractJsonSerializer and the JavaScriptSerializer. Each result is over 5000 iterations, and the code that produces them is in the PerformanceTests class.

JsonSerializerSettings

There are a lot of settings on JsonSerializer, but only a few of them are exposed through the JavaScriptConvert.SerializeObject/DeserializeObject helper methods. To make those options available through the easy-to-use helper methods, I have added the JsonSerializerSettings class along with overloads of the helper methods that accept it.

```csharp
using System;
using System.ComponentModel;

public class Invoice
{
    public string Company { get; set; }
    public decimal Amount { get; set; }

    [DefaultValue(false)]
    public bool Paid { get; set; }

    [DefaultValue(null)]
    public DateTime? PaidDate { get; set; }
}
```

This example of JsonSerializerSettings also shows another new feature of Json.NET 3.5: DefaultValueHandling. When it is set to Ignore, any property whose value is the same as its default value is skipped when writing JSON.

```csharp
public void SerializeInvoice()
{
    Invoice invoice = new Invoice
    {
        Company = "Acme Ltd.",
        Amount = 50.0m,
        Paid = false
    };

    string json = JavaScriptConvert.SerializeObject(invoice);

    Console.WriteLine(json);
    // {"Company":"Acme Ltd.","Amount":50.0,"Paid":false,"PaidDate":null}

    json = JavaScriptConvert.SerializeObject(invoice,
        Formatting.None,
        new JsonSerializerSettings { DefaultValueHandling = DefaultValueHandling.Ignore });

    Console.WriteLine(json);
    // {"Company":"Acme Ltd.","Amount":50.0}
}
```
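To make the DefaultValueHandling.Ignore idea concrete without depending on Json.NET, here is a hypothetical reflection-based sketch of the check: a property is written only if it has no [DefaultValue] attribute or its current value differs from the attribute's value. SampleInvoice mirrors the Invoice class above; the filter is an illustration, not how Json.NET implements the setting.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Reflection;

// Hypothetical copy of the Invoice class for a self-contained example.
public class SampleInvoice
{
    public string Company { get; set; }
    public decimal Amount { get; set; }

    [DefaultValue(false)]
    public bool Paid { get; set; }

    [DefaultValue(null)]
    public DateTime? PaidDate { get; set; }
}

// Hypothetical sketch of the "skip default values" rule.
public static class DefaultValueFilter
{
    // Returns the names of the public properties that would be written.
    public static List<string> PropertiesToWrite(object target)
    {
        var names = new List<string>();
        foreach (PropertyInfo property in target.GetType().GetProperties())
        {
            object value = property.GetValue(target, null);
            var attribute = (DefaultValueAttribute)Attribute.GetCustomAttribute(
                property, typeof(DefaultValueAttribute));

            // No [DefaultValue] means always write; otherwise write only when
            // the current value differs from the declared default.
            if (attribute == null || !object.Equals(attribute.Value, value))
                names.Add(property.Name);
        }
        return names;
    }
}
```

For the invoice above this leaves Company and Amount, which matches the second line of output in the example.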

Writing Raw JSON

For a long time JsonWriter has had a WriteRaw method. I can proudly say that now it actually works. Serializing raw JSON is also much improved with the new JsonRaw object.

Changes

Here is a complete list of what has changed.

- New feature - Compact framework support

- New feature - Big serializer performance improvements through caching of static type data

- New feature - Added DefaultValueHandling option to JsonSerializer

- New feature - JsonSerializer better supports deserializing into ICollection<T> objects

- New feature - Added JsonSerializerSettings class along with overloads to JavaScriptConvert serialize/deserialize methods

- New feature - IsoDateTimeConverter and JavaScriptDateTimeConverter now support nullable DateTimes

- New feature - Added JsonWriter.WriteValue overloads for nullable types

- New feature - Newtonsoft.Json.dll is now signed

- New feature - Much better support for reading, writing and serializing raw JSON

- New feature - Added JsonWriter.WriteRawValue

- Change - Renamed Identifier to JsonRaw

- Change - JSON date constructors deserialize to a date

- Fix - JavaScriptConvert.DeserializeObject checks for additional content after deserializing an object

- Fix - Changed JsonSerializer.Deserialize to take a TextReader instead of a StringReader

- Fix - Changed JsonTextWriter.WriteValue(string) to write null instead of an empty string for a null value

- Fix - JsonWriter.WriteValue(object) no longer errors on a null value

- Fix - Corrected JContainer child ordering when adding multiple values

Links

Json.NET 3.5 Beta 1 Download – Json.NET source code, documentation and binaries

-

Speeding up ASP.NET with the Runtime Page Optimizer

Earlier this year I worked on an exciting product called the Runtime Page Optimizer (RPO). The RPO was originally written by the guys at ActionThis to solve their website’s performance issues, and I had the opportunity to develop it with them and help turn it into a product that works on any ASP.NET website.

How RPO works

At its heart the RPO is an HttpModule that intercepts page content at runtime, inspects it, and then rewrites the page so that it is optimized for download to the client. Because the RPO is a module it can quickly be added to any existing ASP.NET website, creating instant performance improvements.

What RPO optimizes

The RPO does three things to speed up a web page:

- Reduces HTTP requests: Reducing the number of resources on a page often produces the most dramatic improvement in performance. A modest-sized web page can still take a significant amount of time to load if it contains many stylesheets, scripts and image files. The reason is that most of the time spent waiting for a web page to load goes not on downloading large files but on HTTP requests bouncing between the browser and the server. The RPO fixes this by intelligently combining CSS and JavaScript text files together and merging images through CSS spriting. Fewer resources means less time wasted on HTTP requests and faster page loads.

- Compresses content: The RPO minifies (removes whitespace from) and zip-compresses JavaScript and CSS files, as well as the ASP.NET page itself. Compression reduces page size, saves bandwidth and further decreases page load times.

- Caching: The RPO ensures all static content has the correct HTTP headers to be cached on a user’s browser. This further decreases "warm" page load times and saves even more bandwidth. When resources change on the server, the client-side cache is automatically refreshed.
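As a rough illustration of the first two techniques, and not the RPO's code, the sketch below combines several scripts into a single response body and compresses it with the base class library's GZipStream. ResourceBundler and its methods are hypothetical names.

```csharp
using System.IO;
using System.IO.Compression;
using System.Text;

// Hypothetical illustration only; not how the RPO is implemented.
public static class ResourceBundler
{
    // Combining: several scripts become one response, so the browser makes
    // one HTTP request instead of many. The ";\n" separator guards against
    // a file that omits its final semicolon.
    public static string Combine(params string[] scripts)
    {
        return string.Join(";\n", scripts);
    }

    // Compressing: gzip the combined text, to be served with
    // Content-Encoding: gzip.
    public static byte[] Gzip(string text)
    {
        byte[] input = Encoding.UTF8.GetBytes(text);
        using (var output = new MemoryStream())
        {
            using (var gzip = new GZipStream(output, CompressionMode.Compress))
            {
                gzip.Write(input, 0, input.Length);
            }
            // ToArray still works after GZipStream has closed the MemoryStream.
            return output.ToArray();
        }
    }

    // The reverse step, as a browser would perform it.
    public static string Gunzip(byte[] data)
    {
        using (var gzip = new GZipStream(new MemoryStream(data), CompressionMode.Decompress))
        using (var reader = new StreamReader(gzip, Encoding.UTF8))
        {
            return reader.ReadToEnd();
        }
    }
}
```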

Other stuff

- Every site is different, so the RPO has extensive configuration options to customize it to best optimize your ASP.NET application. RPO has been used on ASP.NET AJAX, MVC, SharePoint, CRM, EpiServer and DotNetNuke websites.

- Lots of thought has been put into performance. The RPO caches all combined content and supports load balancing. Once combined content has been cached the only thing that happens at runtime is lightweight parsing of HTML, which is darn quick. I know because I wrote it that way [:)]

- The RPO supports all browsers, including the new kid on the block, Chrome. RPO is even smart enough to take advantage of features only available in certain browsers and supply specially customized content to further improve page load times.

- The industrious people at RPO are making a version for Apache.

Working on the RPO was a great experience. Some very smart people are behind it and everyone contributed something unique to make RPO the awesome tool that it is today.

RPO is out now and a fully featured trial is available at getrpo.com. Check it out!

Update:

I noticed there is a question about the RPO on StackOverflow (I've been using StackOverflow a lot lately, excellent resource). The question discussion has some positive comments from users who have tried the RPO out.

-

ASP.NET MVC and Json.NET

This is an ActionResult I wrote to return JSON from ASP.NET MVC to the browser using Json.NET.

The benefit of using JsonNetResult over the built-in JsonResult is that you get a better serializer (IMO [:)]) and all the other benefits of Json.NET, like nicely formatted JSON text.

```csharp
using System;
using System.Text;
using System.Web;
using System.Web.Mvc;
using Newtonsoft.Json;

public class JsonNetResult : ActionResult
{
    public Encoding ContentEncoding { get; set; }
    public string ContentType { get; set; }
    public object Data { get; set; }

    public JsonSerializerSettings SerializerSettings { get; set; }
    public Formatting Formatting { get; set; }

    public JsonNetResult()
    {
        SerializerSettings = new JsonSerializerSettings();
    }

    public override void ExecuteResult(ControllerContext context)
    {
        if (context == null)
            throw new ArgumentNullException("context");

        HttpResponseBase response = context.HttpContext.Response;

        response.ContentType = !string.IsNullOrEmpty(ContentType)
            ? ContentType
            : "application/json";

        if (ContentEncoding != null)
            response.ContentEncoding = ContentEncoding;

        if (Data != null)
        {
            JsonTextWriter writer = new JsonTextWriter(response.Output) { Formatting = Formatting };

            JsonSerializer serializer = JsonSerializer.Create(SerializerSettings);
            serializer.Serialize(writer, Data);

            writer.Flush();
        }
    }
}
```

Using JsonNetResult within your application is pretty simple. The example below serializes the NumberFormatInfo settings for the .NET invariant culture.

```csharp
public ActionResult GetNumberFormatting()
{
    JsonNetResult jsonNetResult = new JsonNetResult();
    jsonNetResult.Formatting = Formatting.Indented;
    jsonNetResult.Data = CultureInfo.InvariantCulture.NumberFormat;

    return jsonNetResult;
}
```

And here is the nicely formatted result.

```json
{
  "CurrencyDecimalDigits": 2,
  "CurrencyDecimalSeparator": ".",
  "IsReadOnly": true,
  "CurrencyGroupSizes": [
    3
  ],
  "NumberGroupSizes": [
    3
  ],
  "PercentGroupSizes": [
    3
  ],
  "CurrencyGroupSeparator": ",",
  "CurrencySymbol": "¤",
  "NaNSymbol": "NaN",
  "CurrencyNegativePattern": 0,
  "NumberNegativePattern": 1,
  "PercentPositivePattern": 0,
  "PercentNegativePattern": 0,
  "NegativeInfinitySymbol": "-Infinity",
  "NegativeSign": "-",
  "NumberDecimalDigits": 2,
  "NumberDecimalSeparator": ".",
  "NumberGroupSeparator": ",",
  "CurrencyPositivePattern": 0,
  "PositiveInfinitySymbol": "Infinity",
  "PositiveSign": "+",
  "PercentDecimalDigits": 2,
  "PercentDecimalSeparator": ".",
  "PercentGroupSeparator": ",",
  "PercentSymbol": "%",
  "PerMilleSymbol": "‰",
  "NativeDigits": [
    "0",
    "1",
    "2",
    "3",
    "4",
    "5",
    "6",
    "7",
    "8",
    "9"
  ],
  "DigitSubstitution": 1
}
```

-

The Giant Pool of Money

A pretty amazing reshaping of the landscape of Wall Street occurred during the weekend. Major finance bank Merrill Lynch was forced to sell itself for a cool $50 billion and another, Lehman Brothers, filed for bankruptcy. Both have lost huge amounts of money from bad debts. They lent money to people who never had the means to pay it back.

This American Life has a great non-technical and entertaining podcast on how they (and we) got into this situation and why the banks did what they did.

A special program about the housing crisis produced in a special collaboration with NPR News. We explain it all to you. What does the housing crisis have to do with the turmoil on Wall Street? Why did banks make half-million dollar loans to people without jobs or income? And why is everyone talking so much about the 1930s? It all comes back to the Giant Pool of Money.

It is an hour long and well worth listening to if you want to have a better understanding of the "credit crunch".

-

DataSet/DataTable Serialization with Json.NET

Rick Strahl has written a great post on serializing ADO.NET objects (DataSet, DataTable, DataRow) to JSON using Json.NET.

-

TechEd 2008 Summary

TechEd NZ 2008 is over! These are my thoughts and experiences of this year's TechEd.

Day 1

Keynote

The keynote started off with a surprise: speakers from National and Labour were invited to present their broadband/IT policies. John Key presented for National and was evangelising their policy of fibre to the home across the nation. I was impressed by how down to earth his speaking style was, and he got great lulz with his pitch "A vote for National is a vote for high definition porn". David Cunliffe presented Labour's policy. He also spoke really well, although after Key's laid-back speech it felt much more political to me. Labour isn't investing as much in broadband as National, and a lot of his time was spent spreading fear and uncertainty about how National will spend the money it has pledged.

Once the politicians had finished pontificating (which took too long), Amit Mital, general manager of the Live Mesh platform, got up to talk. His content was more practical than last year's keynote by Lou Carbone, but I didn't enjoy it nearly as much. Weirdly, after talking about Live Mesh and hyping it up, we never saw a demo of it in action.

Hands On Labs

I contributed to the Hands On Labs launcher this year and I went and had a look at the lab room early on day 1. I didn't mention my work on it before TechEd started in case everything went horribly wrong, but from everything I have heard the labs were a great success. It is now safe to associate myself with it [;)]

The most interesting and visible change I made is that lab manuals no longer need to be printed out but are instead displayed on a second screen. The usability is better than the traditional paper manuals (copy and paste from the manuals ftw!) and it doesn't require a couple of trees' worth of paper to print everything out 100 times.

After looking at a couple of alternatives I chose to convert the manual documents to the XPS format and display them using the WPF DocumentViewer. This approach ended up being really simple and provides a great user experience. Since it is WPF I was able to style the DocumentViewer with Intergen colours and logos. When I told Scott Hanselman about what I had done with the hands on lab launcher this year he was somewhat shocked, and said it was the first time he had heard of anyone actually using XPS.

Ask The Experts

Finally a chance to legitimately wear a hat with the word "Expert" on it! Since my session was on the Office stream I was an Office expert which I thought was pretty funny.

Day 2

OFC404 - Open XML Development Turning SharePoint Data into Microsoft Office Documents: A Deep Dive into SharePoint Document Assembly Using Open XML

"We hope to provide you with as much value as there are words in this session's title" - Reed Shaffner

My session, co-presented with Reed Shaffner, went really well. I was nervous beforehand but the demos went without a hitch and I think the content was the sort of information that people went to the session looking for. There were a lot of questions which I think we covered well.

At the end of the presentation we offered a copy of the demo source code to anyone who wanted it, both as a download on the TechEd website and by copying it directly to a USB key there and then, which worked out well.

Hanging Out In The Speakers Room

I got asked to present at TechEd quite late this year and I ended up with unfinished work that needed doing at TechEd. Most of Tuesday after my presentation was spent working away in the Speakers Room.

I didn't get to see any sessions on Tuesday but hanging out with the speakers and event organisers was a great experience in itself. Last year I was a delegate to TechEd so it has been really cool this year to be able to interact with a lot of the presenters I saw in 2007.

A highlight was spending the afternoon sitting next to Scott Hanselman and then later meeting up and walking down to TechFest with him. Hanselminutes is my favourite technology podcast and the knowledge and passion Scott shows in his podcast for techie stuff transfers to real life. I also really enjoyed his dry sense of humour. I have never seen JD more excited than when he told me that he and Scott had exchanged quotes from Family Guy.

TechFest

This year TechFest was held at Princess Wharf and spread across three bars. The music and ambiance were great.

Day 3

Packing Up

The flight back to Wellington was early this afternoon so I only had a half day at TechEd. Today I went and actually saw some sessions, checked out the market place and did various other TechEd-ish things before packing up and checking out.

Sidenote: I am pretty sure I bumped into Teller of Penn & Teller fame in the SkyCity Grand hotel lobby. I would have gone up to talk to him but hearing Teller speak, who is famous for not speaking, quite possibly would have caused my head to explode.

TechEd 2008 has been a blast. Bring on TechEd 2009!

-

TechEd 2008

Tomorrow I fly up to TechEd NZ 2008. Intergen is running the hands on labs again so expect to see groups of people in striking yellow camo pants.

As always Intergenites are also presenting a number of sessions:

- SOA205 - Extending the Application Platform with Cloud Services - Chris Auld

- SOA209 - The Road to "Oslo" : The Microsoft Services and Modeling Platform - Chris Auld

- OFC301 - Creating Content Centric Publishing Sites with MOSS 2007 - Mark Orange, Zac Smith

- OFC305 - Best Practice ECM with SharePoint 2007 - Michael Noel, Mark Orange

- OFC404 - Open XML Development Turning SharePoint Data into Microsoft Office Documents - James Newton-King, Reed Shaffner

Yup I am presenting at TechEd! I'm not sure which I am more of: excited or nervous. Good times.

-

Json.NET 3.0 Released

JSON's popularity continues to grow. In the few months since Json.NET 2.0 was released it has been downloaded over 5000 times and lots of new feedback has been posted in the project's forum. This release of Json.NET adds heaps of new features, mostly from your suggestions, and fixes all known bugs!

Silverlight Support

Json.NET now supports running in the browser within Silverlight. The download includes Newtonsoft.Json.Silverlight.dll which can be referenced from Silverlight projects.

The Silverlight build of the library shares the same source code as Json.NET and it is identical to use.

LINQ to JSON even easier to use

There have been lots of little changes to LINQ to JSON in this release but the main one in Json.NET 3.0 is that the two primary classes now implement common .NET collection interfaces.

JObject implements IDictionary<TKey, TValue> and JArray implements IList<T>. Working with these classes is now just like any other list or dictionary!

Serializer Improvements

Along with incorporating many small improvements, the big new addition to the Json.NET 3.0 serializer is JsonConverterAttribute.

JsonConverterAttribute can be placed on a class to define the JsonConverter that should always be used to serialize that class, instead of the default serializer logic.

JsonConverterAttribute can also be placed on a field or property to specify which converter you want to use to serialize that specific member.

```csharp
using System;
using Newtonsoft.Json;
using Newtonsoft.Json.Converters;

public class Person
{
    // "John Smith"
    public string Name { get; set; }

    // "2000-12-15T22:11:03"
    [JsonConverter(typeof(IsoDateTimeConverter))]
    public DateTime BirthDate { get; set; }

    // new Date(976918263055)
    [JsonConverter(typeof(JavaScriptDateTimeConverter))]
    public DateTime LastModified { get; set; }
}
```

Changes

Here is a complete list of what has changed.

- New feature - Silverlight Json.NET build.

- New feature - JObject now implements IDictionary, JArray now implements IList.

- New feature - Added JsonConverterAttribute. Used to define a JsonConverter to serialize a class or member. Can be placed on classes or fields and properties.

- New feature - Added DateTimeFormat to IsoDateTimeConverter to customize date format.

- New feature - Added WriteValue(object) overload to JsonWriter.

- New feature - Added JTokenEqualityComparer.

- New feature - Added IJEnumerable. JEnumerable and JToken now both implement IJEnumerable.

- New feature - Added AsJEnumerable extension method.

- Change - JsonSerializer does a case insensitive compare when matching property names and constructor params.

- Change - JObject properties must have a unique name.

- Bug fix - Internal string buffer properly resizes when parsing long JSON documents.

- Bug fix - JsonWriter no longer errors in certain conditions when writing a JsonReader's position.

- Bug fix - JsonSerializer skips multi-token properties when putting together constructor values.

- Bug fix - Change IConvertible conversions to use ToXXXX methods rather than casting.

- Bug fix - GetFieldsAndProperties now properly returns a distinct list of member names for classes that have overridden abstract generic properties.

Donate

You can now support Json.NET by donating. Json.NET is a free open source project and is developed in personal time.

Links

Json.NET 3.0 Download - Json.NET source code and binaries

-

Intergen Wins 2008 Microsoft Partner Of The Year Award

Last night Intergen, my most excellent employer, won Microsoft Partner Of The Year Award for New Zealand.

Microsoft said we won the award because of our commitment to delivering solutions to our clients across the entire Microsoft Application Stack. Intergen was recognised as the only partner who can truly offer solutions that span the breadth from .NET development through MOSS, BI, CRM, NAV etc. Special mention was also made of the work we did in support of the Office Open XML standard.

Chris was on hand to accept the award. He was rather blasé about the whole thing...

-

I Rule at Chain Letters

With only a little prodding *cough*Mr Law*cough* all the people I tagged for How I Got Started in Software Development made a post.

- Andrew Tokeley - How I Got Started in Software Development

- Gavin Barron - /dev/gav

- Brendan Law - The evolution of chain letters...

- Jo Chapman - Meme-ishness

- Chinnakonda "Chaks" Chakkaradeep - How I Got Started in Software Development

Good luck, wealth, love, health and fame are mine!

-

Kids Say the Darndest Things

Question:

Can Json.net parse rfc-822 format?

Answer:

I'm not going to spend time testing this myself right now but I would guess that the odds of a JSON parser being able to successfully and correctly parse the RFC-822 email format is low.

*facepalm*

-

How I Got Started in Software Development

I have been memed.

How old were you when you started programming?

19.

How did you get started in programming?

Most of my experience with computers as a teenager was playing computer games. It wasn't until my second year of doing commerce at university that I did a programming paper and really got into it. Oddly I have never had the desire to write a computer game.

What was your first language?

Visual Basic 6.

What was the first real program you wrote?

Some lameo VB6/Access data entry form for university.

What languages have you used since you started programming?

Visual Basic, SQL, PL/SQL, JavaScript, C#, Python, Delphi.

What was your first professional programming gig?

Building a transaction management system for a small Internet startup.

If you knew then what you know now, would you have started programming?

Yes.

If there is one thing you learned along the way that you would tell new developers, what would it be?

The software development world is a huge place. Don't be afraid to spend a couple of years exploring and finding what you’re most passionate about.

What’s the most fun you’ve ever had… programming?

Solving challenging business and technology problems in a simple, maintainable way that hides all the complexity behind an easy-to-use interface. Json.NET and the RuntimePageOptimizer are two projects that I have really enjoyed working on.

I choose

-

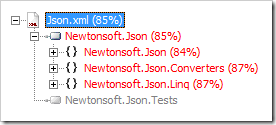

Unit Test Code Coverage - What Is A Good Number?

Shawn writes that you should have 100% test code coverage, Jason agrees. Tokes, a co-worker and the person who pointed these posts out to me, disagrees.

I also disagree.

Background

I'm a strong believer in unit testing. I have used unit testing in almost every project I have been involved in for a couple of years now and each time I find I get more and more value from using them.

My experience with code coverage tools is more limited. I first started looking at NCover and code coverage after coming across an NCover article written by its creator. The article used Json.NET, my own open source project, as the example! (if that doesn't prompt you to look at something nothing will [:D])

Near the end of the development cycle of Json.NET 2.0, NCover was a huge help in identifying which methods and sections of code didn't have any tests running over them. Without NCover, many of the bugs I eventually found by unit testing that code would still be in Json.NET.

Coverage Percentage

While I think NCover is a great tool for identifying which sections of code aren't being tested, the overall percentage it reports is potentially dangerous.

Every software project has limited resources, and spending them chasing that number is a poor use of them. 100% coverage certainly doesn't mean that your program is bug free: a line that a test has executed with one input can still break with another.

When it comes to unit testing I believe you are better off writing tests that focus on your use cases.

Obviously checking that the results are right is important, but so is making sure that your code behaves as expected when passed boundary values, performing inverse operations, and cross-checking results elsewhere in the application. These are all important things to test when creating robust code, but none of them are necessary to achieve 100% coverage.
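A small hypothetical example makes the boundary value point concrete. The MidpointExample class below is my own illustration, not code from Json.NET: a single test such as MidpointNaive(2, 4) == 3 executes every line of MidpointNaive, so a coverage tool would report it as fully covered, yet passing two large values overflows int. Only a boundary value test catches that.

```csharp
public static class MidpointExample
{
    // One ordinary test executes every line of this method (100% coverage),
    // but a + b overflows int for large inputs: it wraps around in the default
    // unchecked context, or throws OverflowException in a checked one.
    public static int MidpointNaive(int a, int b)
    {
        return (a + b) / 2;
    }

    // Boundary-safe version: widen to long first so the sum cannot overflow.
    // The result of dividing by 2 always fits back into an int.
    public static int Midpoint(int a, int b)
    {
        return (int)(((long)a + b) / 2);
    }
}
```

Widening to long is just one possible fix; the point is that the coverage number was already 100% before the bug was found.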

A Good Number

So what is a good number? If you have written tests that cover the possible uses of your application in the time available (NCover is useful for double-checking that you are testing everything you want to) and have then verified that the results are correct, then whatever number your code coverage is at that moment is, in my opinion, a good number.

Json.NET's was 85%.

-

What Is Coming In Json.NET 2.1

I have just checked in two new features coming soon in Json.NET 2.1: Silverlight client support and improvements to the LINQ to JSON objects. If you are feeling cutting edge you can download the latest source code from CodePlex. Buyer beware: Code coverage of the changes is currently light.

To compile a Silverlight build of Json.NET you will need the latest Silverlight 2.0 Beta 2 bits. There is also a unit test project in the solution for the Silverlight build. Read about how it works here (I really wish this was around when I was working on TextGlow).

The LINQ to JSON changes are minor but useful. JObject now implements IDictionary and JArray now implements IList. For those who are new to Json.NET, these common interfaces should make working with LINQ to JSON objects feel much more familiar.

If you have any suggestions of what you would like to see in Json.NET 2.1 let me know.

-

I Don't Read Programming Books - What Am I Missing Out On?

Of Jeff Atwood's list of 16 recommended books for developers I have read zero. Ditto this list of Top 20 Programming Books. Searching through Amazon recommended programming book lists also yielded no hits.

When learning a new software development technology or idea I have always turned to the web: blogs, articles, open source projects and so on, leaving the books I read largely to fiction.

What am I missing out on? Are blogs and other online content enough, or will reading The Pragmatic Programmer (common to almost every list) make me a better software developer?

-

Important Json.NET 2.0 milestone

-

Display nicely formatted .NET source code on your blog

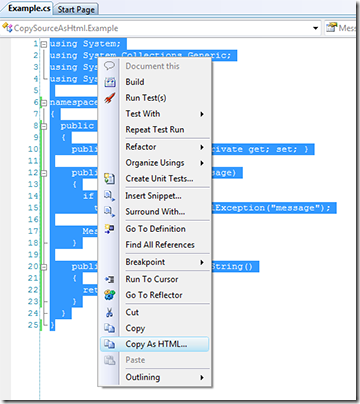

In my experience, the best way to display nicely formatted code in a blog post is a Visual Studio plugin called CopySourceAsHtml.

Once the plugin is installed simply select the code you want to display and choose Copy As HTML. A clean HTML representation of your code will be copied onto the clipboard which can then be pasted into your blog editor of choice (I like Windows Live Writer).

The nice thing about CopySourceAsHtml is that the code on your blog looks exactly like it would in Visual Studio, right down to highlighting class names.

CopySourceAsHtml also works on any file in Visual Studio be it C#, VB.NET, XML, HTML, XAML or JavaScript. The version of C# or VB.NET also doesn't matter.

Finally CopySourceAsHtml works with almost any blog. Since it is just HTML there is nothing that needs to be done on the server.

CopySourceAsHtml is free and can be downloaded here. To get it up and running with VS2008 follow these instructions (it's not hard).

-

Slice - Collection Slicing Extension Method

I really like Python collection slicing. It is easy to use, flexible and forgiving. Using C# 3.0 extension methods I have recreated collection slicing in .NET.

List<int> initial = new List<int> { 1, 2, 3, 4, 5 };

var sliced = initial.Slice(1, 4);
// 2, 3, 4

sliced = initial.Slice(0, 5, 2);
// 1, 3, 5

sliced = initial.Slice(null, null, -2);
// 5, 3, 1

sliced = initial.Slice(null, -1);
// 1, 2, 3, 4

sliced = initial.Slice(-2, -1);
// 4

Just specify the start index, stop index and step, and the method will create a new collection with all the valid values from the initial collection. Slice will never throw an out-of-range exception: if there are no values within the specified indexes then an empty collection is returned.

Start, stop and step can all be negative. A negative start or stop will begin from the end of the collection (e.g. -1 becomes source.Count - 1) and a negative step will reverse the new collection.

public static IEnumerable<T> Slice<T>(this IEnumerable<T> source, int? start)
{
    return Slice<T>(source, start, null, null);
}

public static IEnumerable<T> Slice<T>(this IEnumerable<T> source, int? start, int? stop)
{
    return Slice<T>(source, start, stop, null);
}

public static IEnumerable<T> Slice<T>(this IEnumerable<T> source, int? start, int? stop, int? step)
{
    if (source == null) throw new ArgumentNullException("source");
    if (step == 0) throw new ArgumentException("Step cannot be zero.", "step");

    // copy sources that aren't lists into a list so they can be indexed
    IList<T> sourceCollection = source as IList<T>;
    if (sourceCollection == null) sourceCollection = new List<T>(source);

    // nothing to slice
    if (sourceCollection.Count == 0) yield break;

    // set defaults for null arguments
    int stepCount = step ?? 1;
    int startIndex = start ?? ((stepCount > 0) ? 0 : sourceCollection.Count - 1);
    int stopIndex = stop ?? ((stepCount > 0) ? sourceCollection.Count : -1);

    // a negative start counts back from the end of the list
    if (start < 0) startIndex = sourceCollection.Count + startIndex;

    // a negative stop counts back from the end of the list
    if (stop < 0) stopIndex = sourceCollection.Count + stopIndex;

    // ensure indexes keep within collection bounds
    startIndex = Math.Max(startIndex, (stepCount > 0) ? 0 : int.MinValue);
    startIndex = Math.Min(startIndex, (stepCount > 0) ? sourceCollection.Count : sourceCollection.Count - 1);
    stopIndex = Math.Max(stopIndex, -1);
    stopIndex = Math.Min(stopIndex, sourceCollection.Count);

    for (int i = startIndex; (stepCount > 0) ? i < stopIndex : i > stopIndex; i += stepCount)
    {
        yield return sourceCollection[i];
    }

    yield break;
}

-

Celebrating the Quick Hack

Sometimes you want something working now. Code that is understandable, maintainable, extensible and performant has its place but there are times you need a piece of functionality ASAP. Enter the quick hack.

Below is a quick hack I did recently for a temporary dev tool. This isn't a post showing off a piece of code's elegance or simplicity. Instead, while it functioned perfectly and took no time to write, this code was so unreadable and performed so badly that I believe the hack deserves some kind of recognition anyway.

The Task

Take a utility console application and turn it into a Windows Forms app that has a minimal UI.

The Problem

The console application writes a lot of debug data to the console. I want that information to be visible in the WinForms app, so I need to display the console output in a text area.

The Quick Hack

Sure, you could abstract away writing to the console through a more generic logging interface and then replace every call to Console.Write, but that is far too smart and time-consuming if you ask me [;)]

Instead, in the spirit of the quick hack, I chose to set Console.Out to my own TextWriter and have it write to the WinForms textbox.

using System;
using System.IO;
using System.Text;

public class DelegateTextWriter : TextWriter
{
    private readonly Encoding _encoding;
    private readonly Action<char> _writeAction;

    public DelegateTextWriter(Encoding encoding, Action<char> writeAction)
    {
        _encoding = encoding;
        _writeAction = writeAction;
    }

    public override Encoding Encoding
    {
        get { return _encoding; }
    }

    // every character written to this writer is handed to the delegate
    public override void Write(char value)
    {
        _writeAction(value);
    }
}

The TextWriter that will be set to Console.Out. Nothing too outrageous so far...

Console.SetOut(new DelegateTextWriter(Console.OutputEncoding, c => {
    Invoke(new Action(() => ConsoleOutputTextBox.Text += c.ToString()));
}));

Haha! How deliciously unreadable.

For the curious, what is going on there is that a lambda function is being created which takes a char. Because Console.Write is being called from a non-UI thread we need to use Control.Invoke. The function creates another lambda function which adds the character to the end of the WinForms textbox. That lambda is wrapped in an Action delegate and passed to Invoke to be run on the UI thread.

The icing on the cake is that not only is it hard to understand at a glance, but it's also incredibly slow. For every single character being written to the console the app will:

- Call two delegates

- Call Control.Invoke

- Turn the character being written into a string

- Create a new string of the entire contents of the textbox so far plus the new character

No wonder it maxed out a CPU core and output got written to the textbox like a typewriter.

After showing off how awesomely bad this hack was I did spend a couple of minutes making it usable. Splitting the code up and adding comments made it somewhat understandable and to fix performance all that was needed was an override for TextWriter.Write(char[]) and replacing the string concatenation with TextBox.AppendText.
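The fixed version isn't shown, so here is a sketch of what it could look like, assuming a delegate that takes whole strings rather than single characters. BufferedDelegateTextWriter is a hypothetical name. It overrides Write(char[], int, int), which the base TextWriter.Write(char[]) overload forwards to, and Write(string), so a whole chunk of console output becomes a single delegate call instead of one per character.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical variant of DelegateTextWriter that forwards whole chunks of text.
public class BufferedDelegateTextWriter : TextWriter
{
    private readonly Encoding _encoding;
    private readonly Action<string> _writeAction;

    public BufferedDelegateTextWriter(Encoding encoding, Action<string> writeAction)
    {
        if (writeAction == null) throw new ArgumentNullException("writeAction");
        _encoding = encoding;
        _writeAction = writeAction;
    }

    public override Encoding Encoding
    {
        get { return _encoding; }
    }

    // Single characters still work; they just aren't the common path any more.
    public override void Write(char value)
    {
        _writeAction(value.ToString());
    }

    // The base Write(char[]) overload forwards here, so a buffer is one call.
    public override void Write(char[] buffer, int index, int count)
    {
        if (count > 0) _writeAction(new string(buffer, index, count));
    }

    // Strings (e.g. from Console.Write(string)) are passed straight through.
    public override void Write(string value)
    {
        if (!string.IsNullOrEmpty(value)) _writeAction(value);
    }
}
```

In the form, the delegate could then use TextBox.AppendText instead of string concatenation, e.g. `s => Invoke(new Action(() => ConsoleOutputTextBox.AppendText(s)))`, which replaces one Control.Invoke per character with one per write.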

If you have any quick hacks of your own that you are proud of (both elegant and horrible) I would love to hear them.

-

Json.NET 2.0 Released

The final version of Json.NET 2.0 is now available for download. The notable new feature(?) of this release is Json.NET now includes documentation. I know, I am just as shocked as you are!

Json.NET - Quick Starts & API Documentation

Overall I am really proud of how Json.NET 2.0 has turned out. From a usability perspective the new LINQ to JSON API makes manually constructing and reading JSON considerably easier compared to the old JsonReader/JsonWriter approach. The JsonSerializer has also seen big usability improvements. New settings and attributes allow a developer to have far greater control over the serialization/deserialization process.

Architecturally I am also pleased with Json.NET 2.0. LINQ to JSON builds on top of the existing Json.NET APIs and there was a lot of reuse between the different Json.NET components: JsonReader/Writer, JsonSerializer and the LINQ to JSON objects. Features that I thought might take a lot of time and code to implement turned out to be quite simple when I realised I could reuse a class from somewhere else.

Changes

Here is a complete list of what has changed since Json.NET 1.3:

Json.NET 2.0 Final

- New feature - Documentation.chm included in download. Contains complete API documentation and quick start guides to Json.NET.

Json.NET 2.0 Beta 3

- New feature - Added JsonObjectAttribute with ability to opt-in or opt-out members by default.

- New feature - Added Skip() method to JsonReader.

- New feature - Added ObjectCreationHandling property to JsonSerializer.

- Change - LOTS of little fixes and changes to the LINQ to JSON objects.

- Change - CultureInfo is specified across Json.NET when converting objects to and from text.

- Change - Improved behaviour of JsonReader.Depth property.

- Change - GetSerializableMembers on JsonSerializer is now virtual.

- Fix - Floating point numbers now use ToString("r") to avoid an overflow error when serializing and deserializing a boundary value.

- Fix - JsonSerializer now correctly serializes and deserializes DBNull.

- Fix - JsonSerializer no longer errors when skipping a multi-token JSON structure.

- Fix - Clone a JToken if it already has a parent and is being added as a child to a new token

Json.NET 2.0 Beta 2

- New feature - Added FromObject to JObject, JArray for creating LINQ to JSON objects from regular .NET objects.

- New feature - Added support for deserializing to an anonymous type with the DeserializeAnonymousType method.

- New feature - Support for reading, writing and serializing the new DateTimeOffset type.

- New feature - Added IsoDateTimeConverter class. Converts DateTimes and DateTimeOffsets to and from the ISO 8601 format.

- New feature - Added JavaScriptDateTimeConverter class. Converts DateTimes and DateTimeOffsets to and from a JavaScript date constructor.

- New feature - XmlNodeConverter handles serializing and deserializing JavaScript constructors.

- New feature - Ability to force XmlNodeConverter to write a value in an array. Logic is controlled by an attribute in the XML, json:Array="true".

- New feature - JsonSerializer supports serializing to and from LINQ to JSON objects.

- New feature - Added Depth property to JsonReader.

- New feature - Added JsonTextReader/JsonNodeReader and JsonTextWriter/JsonNodeWriter.

- Change - More concise LINQ to JSON querying API.

- Change - JsonReader and JsonWriter are now abstract.

- Change - Dates are now serialized in a JSON compliant manner, similar to ASP.NET's JavaScriptSerializer or WCF's DataContractJsonSerializer.

- Change - JsonSerializer will serialize null rather than throwing an exception.

- Change - The WriteTo method on LINQ to JSON objects now supports JsonConverters.

- Fix - JsonTextReader correctly parses NaN, PositiveInfinity and NegativeInfinity constants.

- Fix - JsonSerializer properly serializes IEnumerable objects.

- Removed - AspNetAjaxDateTimeConverter. Format is no longer used by ASP.NET AJAX.

- Removed - JavaScriptObject, JavaScriptArray, JavaScriptConstructor. Replaced by LINQ to JSON objects.

Json.NET 2.0 Beta 1

- New feature - LINQ to JSON!

- New feature - Ability to specify how JsonSerializer handles null values when serializing/deserializing.

- New feature - Ability to specify how JsonSerializer handles missing values when deserializing.

- Change - Improved support for reading and writing JavaScript constructors.

- Change - A new JsonWriter can now write additional tokens without erroring.

- Bug fix - JsonSerializer handles deserializing dictionaries where the key isn't a string.

- Bug fix - JsonReader now correctly parses hex character codes.

Json.NET 2.0 Download - Json.NET source code, binaries and documentation

-

Thoughts on Twitter

I like Twitter. The idea behind it is you post a short message about what you are up to and it then appears to anyone who has subscribed to your updates. It takes my favourite feature of Facebook, status updates, and then removes all the other crud.

The nice thing about updates (or "tweets" if you want to look like a douche) is that they are passive. They don't fill up an inbox like email so you can let others know about something you are up to that is interesting, but is perhaps not worth spamming your entire address book with, e.g. "Working on my first MVC application. Looking at getting started guides." It is a great way to start conversations that never would have happened otherwise.

Twitter is a nifty service but it is not perfect. The Twitter website is flaky (perhaps not the best showcase for Ruby on Rails) and the web UI doesn't handle subscribing to a large number of people particularly well. I think at the moment the place where Twitter shines is with small communities.

p.s. I have added my Twitter status to the blog (look to the right). A great example of a useful JSON API [:)]

-

Mindscape WPF Elements

The guys at Mindscape have released another WPF product called WPF Elements. This time it is a set of WPF controls aimed primarily at line of business apps: masked textboxes, numeric textboxes, date time pickers, a multi-column treeview, and more.

I have used a product of theirs called Lightspeed on a number of projects and Mindscape has always been really prompt when responding to queries. If you are developing business WPF applications then Elements is well worth checking out.

-

Json.NET 2.0 Beta 3 - Turkey Test Approved

This post on handling different cultures in .NET really caught my imagination a couple of months back. Today I can proudly say that Json.NET passes the Turkey Test!

Aside from poultry, the main focus of this Json.NET release has been fixing all the little issues in the LINQ to JSON objects (JObject, JArray, JValue, etc). NCover has been a great help highlighting methods that didn't have any tests over the top of them. Test coverage has improved significantly in this release.

The JsonSerializer has also seen some changes. All reported bugs have been fixed and a couple of new features have been added. The most significant new feature is the JsonObjectAttribute. Placed on a class the JsonObjectAttribute lets you control whether properties are serialized by default (e.g. XmlSerializer behaviour) or whether you must explicitly include each property you want serialized with the JsonPropertyAttribute (e.g. WCF behaviour).

[JsonObject(MemberSerialization.OptIn)]
public class Person
{
    [JsonProperty]
    public string Name { get; set; }

    [JsonProperty]
    public DateTime BirthDate { get; set; }

    // not serialized
    public string Department { get; set; }
}

The default behaviour for classes without the attribute remains the same: properties are serialized by default (opt-out).

Changes

- New feature - Added JsonObjectAttribute with ability to opt-in or opt-out members by default.

- New feature - Added Skip() method to JsonReader.

- New feature - Added ObjectCreationHandling property to JsonSerializer.

- Change - LOTS of little fixes and changes to the LINQ to JSON objects.

- Change - CultureInfo is specified across Json.NET when converting objects to and from text.

- Change - Improved behaviour of JsonReader.Depth property.

- Change - GetSerializableMembers on JsonSerializer is now virtual.

- Fix - Floating point numbers now use ToString("r") to avoid an overflow error when serializing and deserializing a boundary value.

- Fix - JsonSerializer now correctly serializes and deserializes DBNull.

- Fix - JsonSerializer no longer errors when skipping a multi-token JSON structure.

- Fix - Clone a JToken if it already has a parent and is being added as a child to a new token

Json.NET 2.0 Beta 3 Download - Json.NET source code and binaries

-

FormatWith 2.0 - String formatting with named variables

The problem with string formatting is that {0}, {1}, {2}, etc, aren't very descriptive. Figuring out what the numbers represent means either deducing their values from their usage context in the string or examining the arguments passed to String.Format. Things become more difficult when you are working with a format string stored in an external resource, as the second option is no longer available.

FormatWith 2.0

I have updated the original FormatWith with an overload that takes a single argument and allows the use of property names in the format string.

MembershipUser user = Membership.GetUser();
Status.Text = "{UserName} last logged in at {LastLoginDate}".FormatWith(user);

It also works with anonymous types:

"{CurrentTime} - {ProcessName}".FormatWith(new { CurrentTime = DateTime.Now, ProcessName = p.ProcessName });

And even allows for sub-properties and indexes:

var student = new
{
    Name = "John",
    Email = "john@roffle.edu",
    BirthDate = new DateTime(1983, 3, 20),
    Results = new[]
    {
        new { Name = "COMP101", Grade = 10 },
        new { Name = "ECON101", Grade = 9 }
    }
};

Console.WriteLine("Top result for {Name} was {Results[0].Name}".FormatWith(student));
// "Top result for John was COMP101"

There isn't much to the code itself. Most of the heavy lifting is done in a regular expression and the .NET DataBinder class.

To start with, a regular expression picks all the {Property} blocks out of the string. The property expression inside the braces is then evaluated against the object argument using the DataBinder class to get the property value. That value is then put into a list of values, and the {Property} block is replaced in the string with the index of the value in the list, e.g. {1}. Finally the rewritten string, which now looks like any other format string, and the list of evaluated values are passed to String.Format.

using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;
using System.Web.UI; // DataBinder

public static string FormatWith(this string format, object source)
{
    return FormatWith(format, null, source);
}

public static string FormatWith(this string format, IFormatProvider provider, object source)
{
    if (format == null)
        throw new ArgumentNullException("format");

    Regex r = new Regex(@"(?<start>\{)+(?<property>[\w\.\[\]]+)(?<format>:[^}]+)?(?<end>\})+",
        RegexOptions.CultureInvariant | RegexOptions.IgnoreCase);

    List<object> values = new List<object>();
    string rewrittenFormat = r.Replace(format, delegate(Match m)
    {
        Group startGroup = m.Groups["start"];
        Group propertyGroup = m.Groups["property"];
        Group formatGroup = m.Groups["format"];
        Group endGroup = m.Groups["end"];

        // evaluate the property expression against the source object
        values.Add((propertyGroup.Value == "0")
            ? source
            : DataBinder.Eval(source, propertyGroup.Value));

        // replace {Property} with {index}, keeping any format string and brace count
        return new string('{', startGroup.Captures.Count) + (values.Count - 1) + formatGroup.Value
            + new string('}', endGroup.Captures.Count);
    });

    return string.Format(provider, rewrittenFormat, values.ToArray());
}
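DataBinder lives in System.Web.UI, so the code above pulls in System.Web. If that dependency isn't wanted outside of ASP.NET, one possible substitute (my own swap, not the original implementation) is plain reflection over a dotted property path. The sketch below keeps the same regular expression and rewrite step but uses a hypothetical EvalPath helper and a hypothetical FormatWithReflection name; unlike DataBinder.Eval it does not understand indexers such as {Results[0].Name}.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Reflection;
using System.Text.RegularExpressions;

public static class FormatWithSketch
{
    // Same pattern as FormatWith 2.0: braces, a property path, an optional :format.
    private static readonly Regex PropertyPattern = new Regex(
        @"(?<start>\{)+(?<property>[\w\.\[\]]+)(?<format>:[^}]+)?(?<end>\})+",
        RegexOptions.CultureInvariant | RegexOptions.IgnoreCase);

    public static string FormatWithReflection(this string format, object source)
    {
        if (format == null) throw new ArgumentNullException("format");

        List<object> values = new List<object>();

        // Rewrite "{Name:fmt}" to "{0:fmt}" while collecting the evaluated values.
        string rewrittenFormat = PropertyPattern.Replace(format, m =>
        {
            string path = m.Groups["property"].Value;
            values.Add(path == "0" ? source : EvalPath(source, path));
            return new string('{', m.Groups["start"].Captures.Count)
                + (values.Count - 1)
                + m.Groups["format"].Value
                + new string('}', m.Groups["end"].Captures.Count);
        });

        return string.Format(CultureInfo.InvariantCulture, rewrittenFormat, values.ToArray());
    }

    // Hypothetical helper: walks a dotted path such as "Owner.Name" with reflection.
    // Indexers like "Results[0]" are not supported and raise a FormatException.
    private static object EvalPath(object source, string path)
    {
        object current = source;
        foreach (string name in path.Split('.'))
        {
            if (current == null) return null;
            PropertyInfo property = current.GetType().GetProperty(name);
            if (property == null)
                throw new FormatException("Property '" + name + "' not found.");
            current = property.GetValue(current, null);
        }
        return current;
    }
}
```

The invariant culture is hard-coded here only to keep the sketch short; the original's IFormatProvider overload is the more flexible design.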

-

FormatWith - String.Format Extension Method

I have a love/hate relationship with String.Format.

On one hand it makes strings easier to read and they can easily be put in an external resource.

On the other hand, every bloody time I want to use String.Format, I only realize it when I am already halfway through writing the string. I find jumping back to the start to add String.Format extremely annoying, and it breaks me out of my current train of thought.

FormatWith

FormatWith is an extension method that wraps String.Format. It is the first extension method I wrote and is probably the one I have used the most.

Logger.Write("CheckAccess result for {0} with privilege {1} is {2}.".FormatWith(userId, privilege, result));

Giving an extension method the same name as a static method on the class (String.Format) confuses the C# compiler, which is why I have called the method FormatWith rather than Format.

public static string FormatWith(this string format, params object[] args)
{
    if (format == null)
        throw new ArgumentNullException("format");

    return string.Format(format, args);
}

public static string FormatWith(this string format, IFormatProvider provider, params object[] args)
{
    if (format == null)
        throw new ArgumentNullException("format");

    return string.Format(provider, format, args);
}

Update:

You may also be interested in FormatWith 2.0.

-

Blog Redesign

If you regularly visit my blog (subscribe already!) you may have noticed the design has been updated.

Here is a comparison between the old and the new.

I threw out the old design and started with a template called EliteCircle, which I have made some fairly substantial updates to. I was a little hesitant about changing the CSS too much (the last time I had done any serious CSS work was over two years ago), but everything ended up going smoothly.

Changes

The previous look was simple which I liked, but it was gradually getting overrun by tag and archive links. Tags are now displayed in a screen real estate efficient cloud, and archive links have been moved to a separate page.

The design is slightly wider. The previous design's minimum resolution was 800x600, which according to Google Analytics is used by just 0.3% of visitors these days. The new minimum resolution is now 1024x768. With the wider design I have increased the content text size to match.

In the new design I have also removed a lot of what I think is unnecessary information from the blog. The focus of a blog should be the content. Details like what categories a post is in or exactly what time a comment was made aren't of interest to the average visitor and have been removed to reduce screen clutter.

Overall I am really happy with the new design and I think it is a big improvement over what was here before.

Obligatory

As always with a redesign, if you spot anything weird or broken, or just think something looks bad, let me know. Thanks!

-

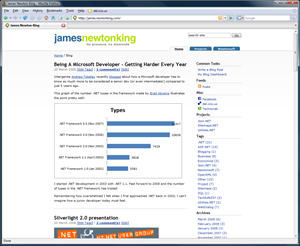

Being A Microsoft Developer - Getting Harder Every Year

Intergenite Andrew Tokeley recently blogged about how a Microsoft developer has to know so much more to be considered a senior dev (or even intermediate!) compared to just 5 years ago.

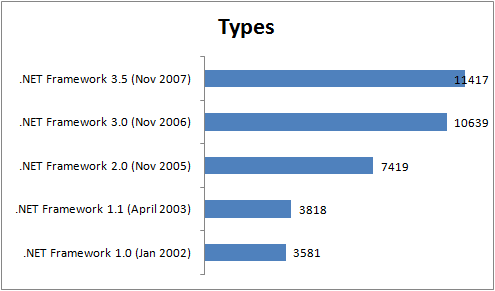

This graph of the number of .NET types in the framework made by Brad Abrams illustrates the point pretty well:

I started .NET development in 2003 with .NET 1.1. Fast forward to 2008 and the number of types in the .NET framework has tripled!

Remembering how overwhelmed I felt when I first approached .NET back in 2003, I can't imagine how a junior developer today must feel.

-

Silverlight 2.0 presentation

Thanks to everyone who came along to the Wellington Dot User Group meeting this evening for my presentation on Silverlight 2.0. We were close to standing room only, which is an indicator of the great interest within the local .NET community around Silverlight.

If you are interested in developing with Silverlight, then http://www.silverlight.net is the one stop shop to help you get started. Silverlight.net is the official Microsoft Silverlight website and it has the Silverlight runtime, tools, examples and an active community if you are in need of assistance.

Feedback on the presentation has been great and I hope everyone who attended feels ready to jump into the world of Silverlight development!

-

TextGlow Coverage Roundup

- www.textglow.com - The TextGlow press release displayed in TextGlow (+1 for recursiveness!)

Blog posts

- Intergen Blog - Announcing TextGlow - a world first from Intergen, NZ

- James Newton-King - Silverlight 2.0 + OOXML = TextGlow

- Chris Auld - Silverlight 2.0 + Office Open XML == TextGlow

- Tim Sneath - A Great Early Silverlight 2 Showcase: TextGlow

- Gray Knowlton - Silverlight viewer for Open XML

- Oliver Bell - New Zealand’s Intergen Deliver OpenXML Viewing Using Silverlight

- Gavin Barron - Intergenite delivers in browser viewer for docx

Videos

- YouTube - Intergen TextGlow: Open XML Document (DOCX) in Silverlight

- VisitMIX - Straight from Word to Web without Office: Intergen

Radio (!?!)

- Radio NZ - This Way Up

NZ Media

- Computerworld - Intergen launches new software in Las Vegas

- Computerworld - MIX08 'fizzes' over Intergen applications

- InfoTech - Silverlight shed on Word docs

- Otago Daily Times - NZ company launches software in Las Vegas

- Geekzone - Intergen releases Office Open XML web application

(plus lots lots more from around the world)

-

Silverlight 2.0 + OOXML = TextGlow

Over the last couple of months I have had the opportunity to work on an exciting project called TextGlow.

TextGlow is a Silverlight 2.0 application for viewing Word 2007 documents on the Internet. With TextGlow it is possible to instantly view a document within a web page, with no Microsoft Office applications installed. Since Silverlight is cross platform the user doesn't even have to be running Windows!

How it Works

The first step to TextGlow displaying a document is going out and downloading it. TextGlow is a Silverlight application and runs completely client side, and that means it needs a local copy to work with. This process happens automatically using the new WebClient class in Silverlight 2.0. As you would expect from a class called WebClient, it provides simple web requests, but what makes WebClient great are the download progress events it provides. Making a progress bar to show the status of a download is a snap.

Next the various files inside the document are extracted. An OOXML document (docx) is actually just a zip file containing XML and other resource files, such as any images that might appear in the document. Fortunately Silverlight has built-in zip file support using the Application.GetResourceStream method.

Now that TextGlow has the OOXML it parses the XML and builds an object model of the document. To parse the XML I used LINQ to XML, which is new in Silverlight 2.0. After an initial learning curve (mainly suppressing the memory of many years of working with horrible DOM APIs [;)]), I found LINQ to XML to be wonderful to work with. I blogged about a couple of the cool features I discovered while working with LINQ to XML along the way.

Using the object model of the document, TextGlow then creates WPF controls to display the content. Writing custom Silverlight WPF controls to render the content was definitely the hardest aspect of the project. When I started work on it with the Silverlight 1.1 Alpha there were no layout controls like StackPanel or Grid. Everything was absolutely positioned and I had to write my own flow layout controls. Paragraphs and complex flowing text were also surprisingly difficult to get right in Silverlight. Fortunately Silverlight 2.0 includes controls like these and makes writing an application like TextGlow much easier.

The final step in displaying a document is simply WPF working its magic and rendering the WPF controls. The user now has the Word 2007 document rendered on their screen using nothing but the power of Silverlight.

Thanks to...

TextGlow has been a great application to work on and it was awesome being able to design and build my own idea.

Thanks to Nas for mocking up the UI. If the UI was left up to me TextGlow would make Notepad look good [;)]

Big thanks to Chris Auld for providing a launch pad for the idea and then providing regular injections of enthusiasm into the project.

If you're interested in seeing more of TextGlow, visit www.textglow.com

Update:

-

The Paradox of Choice

I haven't read The Paradox of Choice, but this talk by the author makes me want to.

Interesting!

-

Json.NET 2.0 Beta 2

I am really happy with how Json.NET 2.0 and LINQ to JSON are turning out. This release offers big improvements in the syntax for both writing and querying JSON. I have also finally put some real effort into fixing some of the niggling issues from previous versions of Json.NET.

Creating JSON Improvements

One of the suggestions I received from the first 2.0 beta was creating JSON objects from anonymous objects.

With LINQ to JSON it is now possible to do this:

JObject o = JObject.FromObject(new
{
  channel = new
  {
    title = "James Newton-King",
    link = "http://james.newtonking.com",
    description = "James Newton-King's blog.",
    item =
      from p in posts
      orderby p.Title
      select new
      {
        title = p.Title,
        description = p.Description,
        link = p.Link,
        category = p.Categories
      }
  }
});

Pretty neat. Using regular .NET objects is also possible.

Under the hood, FromObject is just a wrapper over the JsonSerializer class and reuses its logic. The difference from regular serialization is that the serializer writes to a JsonTokenWriter instead of a JsonTextWriter. Both classes are new in this release and inherit from JsonWriter, which is now abstract.

I think it is really cool the way LINQ to JSON, JsonWriter and JsonSerializer dovetail together here. Once JsonWriter had been split apart and I could plug in different JsonWriters into JsonSerializer depending upon what output I wanted, adding this feature took no time at all [:)]
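The pattern is easy to see in miniature. The sketch below uses hypothetical, heavily simplified classes, not Json.NET's real ones: flat objects with string values only, and no escaping. The serializer only talks to an abstract writer. Swapping a text writer for a token-building writer therefore changes the output without touching the serialization logic.

```csharp
using System.Collections.Generic;
using System.Text;

// Hypothetical abstract writer; the serializer depends only on this.
public abstract class SketchWriter
{
    public abstract void WriteStartObject();
    public abstract void WritePropertyName(string name);
    public abstract void WriteValue(string value);
    public abstract void WriteEndObject();
}

// Produces JSON text for a flat object (no string escaping in this sketch).
public class TextSketchWriter : SketchWriter
{
    readonly StringBuilder _sb = new StringBuilder();
    bool _needComma;

    public override void WriteStartObject() { _sb.Append('{'); _needComma = false; }
    public override void WritePropertyName(string name)
    {
        if (_needComma) _sb.Append(',');
        _sb.Append('"').Append(name).Append("\":");
    }
    public override void WriteValue(string value) { _sb.Append('"').Append(value).Append('"'); _needComma = true; }
    public override void WriteEndObject() { _sb.Append('}'); }
    public override string ToString() => _sb.ToString();
}

// Builds an in-memory object instead (a dictionary standing in for a LINQ to JSON object).
public class TokenSketchWriter : SketchWriter
{
    string _pendingName;
    public Dictionary<string, string> Result { get; private set; }

    public override void WriteStartObject() { Result = new Dictionary<string, string>(); }
    public override void WritePropertyName(string name) { _pendingName = name; }
    public override void WriteValue(string value) { Result[_pendingName] = value; }
    public override void WriteEndObject() { }
}

public static class SketchSerializer
{
    // Same serialization logic regardless of which writer is plugged in.
    public static void Serialize(SketchWriter writer, IEnumerable<KeyValuePair<string, string>> properties)
    {
        writer.WriteStartObject();
        foreach (var pair in properties)
        {
            writer.WritePropertyName(pair.Key);
            writer.WriteValue(pair.Value);
        }
        writer.WriteEndObject();
    }
}
```

Passing a `TextSketchWriter` yields JSON text, while a `TokenSketchWriter` yields an in-memory dictionary from the very same `Serialize` call.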

Querying JSON Improvements

I felt the querying API in beta 1 was too wordy and explicit. Beta 2's API is more concise and should be easier to use.

Before:

var categories =
  from c in rss.PropertyValue<JObject>("channel")
    .PropertyValue<JArray>("item")
    .Children<JObject>().PropertyValues<JArray>("category")
    .Children<string>()
  group c by c into g
  orderby g.Count() descending
  select new { Category = g.Key, Count = g.Count() };

After:

var categories =
  from c in rss["channel"]["item"].Children()["category"].Values<string>()
  group c by c into g
  orderby g.Count() descending
  select new { Category = g.Key, Count = g.Count() };

I am still putting some thought into this area but I think it is a definite improvement.

Deserializing Anonymous Types

It is now possible to deserialize directly to an anonymous type with the new DeserializeAnonymousType method. A new method was required because the only way I found to get at the type information of an anonymous type is to have an instance passed in as an argument, so that the compiler can infer T from it.

public static T DeserializeAnonymousType<T>(string value, T anonymousTypeObject)
{
  return DeserializeObject<T>(value);
}

If there is a better way let me know!
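Passing an instance is what lets the compiler infer `T`, since an anonymous type has no name you can write. Creating an instance is the other half of the problem. Anonymous type properties are read-only, so values have to go through the compiler-generated constructor, whose parameters are named after the properties.

Below is a minimal sketch of that second step. It assumes the JSON has already been parsed into a dictionary; parsing is omitted. `AnonymousSketch.Create` is hypothetical and not necessarily how Json.NET does it.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public static class AnonymousSketch
{
    // 'template' is never read; it only exists so the compiler can infer T.
    public static T Create<T>(IDictionary<string, object> values, T template)
    {
        // C# anonymous types have a single public constructor whose parameters
        // are named after the (read-only) properties.
        ConstructorInfo ctor = typeof(T).GetConstructors().Single();
        object[] args = ctor.GetParameters()
            .Select(p => values.TryGetValue(p.Name, out object v) ? v : DefaultOf(p.ParameterType))
            .ToArray();
        return (T)ctor.Invoke(args);
    }

    // Missing values fall back to the parameter type's default.
    static object DefaultOf(Type type) =>
        type.IsValueType ? Activator.CreateInstance(type) : null;
}
```

Usage follows the same shape as DeserializeAnonymousType: `AnonymousSketch.Create(values, new { Title = "", Count = 0 })` returns an object whose `Title` and `Count` are strongly typed.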

Dates

Dates in JSON are hard.

The first issue is that there is no literal syntax for dates in the JSON spec. You either have to invent your own or break from the spec and use the JavaScript Date constructor, e.g. new Date(976918263055).

Historically Json.NET defaulted to using the JS Date constructor, but in this release the default format has changed to the approach taken by Microsoft. The "\/Date(1198908717056+1200)\/" format is conformant with the JSON spec, allows timezone information to be included with the DateTime and will make Json.NET more compatible with Microsoft's JSON APIs. For developers who want to keep using the JavaScript Date constructor format of Json.NET 1.x, a JavaScriptDateTimeConverter has been added.

The second issue with dates in JSON (and also in .NET, it turns out!) is timezones. I have never written an application that needed to worry about timezones before and I have learnt a lot over the past couple of days [:)]

Previously Json.NET was returning local ticks when it really should have been using UTC ticks. That has now been corrected.
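The arithmetic behind both formats is the same: milliseconds since 1970-01-01 UTC. Computing from `UtcDateTime` ticks is what avoids the local-ticks problem described above.

Below is a minimal sketch, assuming a `DateTimeOffset` input. `DateFormatSketch` and its methods are hypothetical. They always append the offset, whereas the real serializers have their own rules about when to include it.

```csharp
using System;
using System.Globalization;

public static class DateFormatSketch
{
    static readonly DateTime UnixEpoch = new DateTime(1970, 1, 1, 0, 0, 0, DateTimeKind.Utc);

    // Milliseconds since 1970-01-01 UTC, computed from UTC ticks (not local ticks).
    public static long ToJavaScriptMilliseconds(DateTimeOffset value) =>
        (value.UtcDateTime.Ticks - UnixEpoch.Ticks) / TimeSpan.TicksPerMillisecond;

    // Microsoft-style date as it appears in JSON text, e.g. \/Date(1198908717056+1200)\/
    public static string ToMicrosoftJsonDate(DateTimeOffset value)
    {
        TimeSpan offset = value.Offset;
        string sign = offset < TimeSpan.Zero ? "-" : "+";
        string hhmm = Math.Abs(offset.Hours).ToString("00", CultureInfo.InvariantCulture)
                    + Math.Abs(offset.Minutes).ToString("00", CultureInfo.InvariantCulture);
        return @"\/Date(" + ToJavaScriptMilliseconds(value).ToString(CultureInfo.InvariantCulture)
               + sign + hhmm + @")\/";
    }

    // Json.NET 1.x style JavaScript Date constructor (valid JavaScript, not valid JSON).
    public static string ToJavaScriptConstructor(DateTimeOffset value) =>
        "new Date(" + ToJavaScriptMilliseconds(value).ToString(CultureInfo.InvariantCulture) + ")";
}
```

For example, `new DateTimeOffset(2007, 12, 29, 18, 11, 57, 56, TimeSpan.FromHours(12))` is 06:11:57.056 UTC. `ToMicrosoftJsonDate` returns `\/Date(1198908717056+1200)\/` for it, matching the example above.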

Other miscellaneous date changes in this release are another new date converter, IsoDateTimeConverter, which reads and writes the ISO 8601 format (1997-07-16T19:20:30+01:00), and support for reading, writing and serializing the new DateTimeOffset struct.
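Reading and writing the ISO 8601 form needs nothing beyond the framework's custom format strings. Below is a minimal sketch with second precision, assuming a single fixed pattern. `IsoDateSketch` is hypothetical and not the IsoDateTimeConverter implementation.

```csharp
using System;
using System.Globalization;

public static class IsoDateSketch
{
    // ISO 8601 with an explicit offset, second precision: 1997-07-16T19:20:30+01:00
    const string Pattern = "yyyy'-'MM'-'dd'T'HH':'mm':'sszzz";

    public static string Write(DateTimeOffset value) =>
        value.ToString(Pattern, CultureInfo.InvariantCulture);

    public static DateTimeOffset Read(string text) =>
        DateTimeOffset.ParseExact(text, Pattern, CultureInfo.InvariantCulture);
}
```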

Changes

All changes since the last release:

- New feature - Added FromObject to JObject, JArray for creating LINQ to JSON objects from regular .NET objects.

- New feature - Added support for deserializing to an anonymous type with the DeserializeAnonymousType method.

- New feature - Support for reading, writing and serializing the new DateTimeOffset type.

- New feature - Added IsoDateTimeConverter class. Converts DateTimes and DateTimeOffsets to and from the ISO 8601 format.

- New feature - Added JavaScriptDateTimeConverter class. Converts DateTimes and DateTimeOffsets to and from a JavaScript date constructor.

- New feature - XmlNodeConverter handles serializing and deserializing JavaScript constructors.

- New feature - Ability to force XmlNodeConverter to write a value in an array. Logic is controlled by an attribute in the XML, json:Array="true".

- New feature - JsonSerializer supports serializing to and from LINQ to JSON objects.

- New feature - Added Depth property to JsonReader.

- New feature - Added JsonTextReader/JsonNodeReader and JsonTextWriter/JsonNodeWriter.

- Change - More concise LINQ to JSON querying API.

- Change - JsonReader and JsonWriter are now abstract.

- Change - Dates are now serialized in a JSON compliant manner, similar to ASP.NET's JavaScriptSerializer or WCF's DataContractJsonSerializer.

- Change - JsonSerializer will serialize null rather than throwing an exception.

- Change - The WriteTo method on LINQ to JSON objects now supports JsonConverters.

- Fix - JsonTextReader correctly parses NaN, PositiveInfinity and NegativeInfinity constants.

- Fix - JsonSerializer properly serializes IEnumerable objects.

- Removed - AspNetAjaxDateTimeConverter. Format is no longer used by ASP.NET AJAX.

- Removed - JavaScriptObject, JavaScriptArray, JavaScriptConstructor. Replaced by LINQ to JSON objects.

Json.NET 2.0 Beta 2 Download - Json.NET source code and binaries

-

LINQ to JSON beta

I like LINQ. A lot. For the past few months I have been fortunate to work on a .NET 3.5 project and I have been making heavy use of LINQ to XML. While using XLINQ it occurred to me that a similar API for working with JSON objects, which share a lot of similarities with XML, would be useful in many situations.

This beta is a very rough early preview of LINQ to JSON. It was literally written in a weekend [:)] The aim is to get feedback and ideas on how the API could be improved.

What is LINQ to JSON

LINQ to JSON isn't a LINQ provider but rather an API for working with JSON objects. The API has been designed with LINQ in mind to enable the quick creation and querying of JSON objects.

LINQ to JSON is an addition to Json.NET. It sits under the Newtonsoft.Json.Linq namespace.

Creating JSON

Creating JSON using LINQ to JSON can be done in a much more declarative manner than was previously possible.

List<Post> posts = GetPosts();

JObject rss = new JObject(
  new JProperty("channel",
    new JObject(
      new JProperty("title", "James Newton-King"),
      new JProperty("link", "http://james.newtonking.com"),
      new JProperty("description", "James Newton-King's blog."),
      new JProperty("item",
        new JArray(
          from p in posts
          orderby p.Title
          select new JObject(
            new JProperty("title", p.Title),
            new JProperty("description", p.Description),
            new JProperty("link", p.Link),
            new JProperty("category",
              new JArray(
                from c in p.Categories
                select new JValue(c)))))))));

Console.WriteLine(rss.ToString());
//{
//  "channel": {
//    "title": "James Newton-King",
//    "link": "http://james.newtonking.com",
//    "description": "James Newton-King's blog.",
//    "item": [
//      {
//        "title": "Json.NET 1.3 + New license + Now on CodePlex",
//        "description": "Announcing the release of Json.NET 1.3, the MIT license and the source being available on CodePlex",
//        "link": "http://james.newtonking.com/projects/json-net.aspx",
//        "category": [
//          "Json.NET",
//          "CodePlex"
//        ]
//      },
//      {
//        "title": "LINQ to JSON beta",
//        "description": "Announcing LINQ to JSON",
//        "link": "http://james.newtonking.com/projects/json-net.aspx",
//        "category": [
//          "Json.NET",
//          "LINQ"
//        ]
//      }
//    ]
//  }
//}

Querying JSON

LINQ to JSON is designed with LINQ querying in mind. These examples use the RSS JSON object created previously.

Getting the post titles:

var postTitles =
  from p in rss.PropertyValue<JObject>("channel")
    .PropertyValue<JArray>("item")
    .Children<JObject>()
  select p.PropertyValue<string>("title");

foreach (var item in postTitles)
{
  Console.WriteLine(item);
}
//LINQ to JSON beta
//Json.NET 1.3 + New license + Now on CodePlex

Getting all the feed categories and a count of how often they are used:

var categories =
  from c in rss.PropertyValue<JObject>("channel")
    .PropertyValue<JArray>("item")
    .Children<JObject>().PropertyValues<JArray>("category")
    .Children<string>()
  group c by c into g
  orderby g.Count() descending
  select new { Category = g.Key, Count = g.Count() };

foreach (var c in categories)
{
  Console.WriteLine(c.Category + " - Count: " + c.Count);
}
//Json.NET - Count: 2
//LINQ - Count: 1
//CodePlex - Count: 1

Feel free to make suggestions and leave comments. I am still experimenting with different ways of working with JSON objects and their values.

LINQ to JSON - Json.NET 2.0 Beta - Json.NET source code and binaries

-

DotNetKicks.com

For anyone who hasn't heard about this site yet, DotNetKicks.com is a community-based news website. Basically it is a lot like Digg in that users submit and vote on articles, but unlike Digg it is focused exclusively on .NET news.

Because the readers of DotNetKicks.com are all .NET developers, and they are the people who vote on what is important, you can be sure that the quality of the articles that appear will always be high. Any important news or useful new tool is sure to find its way onto the front page. You can keep up with .NET without being subscribed to 100 different blogs.

If you have your own blog, DotNetKicks.com can also be a great way to grow your readership. As a member you can submit your own blog posts for users to vote on. If users find a post interesting and "kick it", it will move onto the front page and a whole new community will be visiting your blog.


-

Why I changed my mind about Extension Methods

My initial impression of extension methods was that they would lead to confusing code.

I imagined other developers picking up a project that used extension methods and being confused as to why ToCapitalCase() was being called on a string or wondering where AddRange(IEnumerable<T>) on that collection came from. Wouldn't it just be better to keep ToCapitalCase and AddRange as they currently are on helper classes?
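For reference, the difference in declaration is small. Below is a minimal sketch using a hypothetical `ToCapitalCase`, assumed here to upper-case the first character. The extension version is an ordinary static method in a static class whose first parameter carries the `this` modifier.

```csharp
using System.Globalization;

// Hypothetical helper class: the traditional static-method approach.
public static class StringUtils
{
    public static string ToCapitalCase(string s) =>
        string.IsNullOrEmpty(s) ? s : char.ToUpper(s[0], CultureInfo.InvariantCulture) + s.Substring(1);
}

// The same method exposed as an extension method: a static class plus the 'this' modifier.
public static class StringExtensions
{
    public static string ToCapitalCase(this string s) => StringUtils.ToCapitalCase(s);
}
```

Both `StringUtils.ToCapitalCase("json")` and `"json".ToCapitalCase()` return "Json". The extension form only becomes visible when the namespace containing `StringExtensions` is imported with a `using` directive. That is exactly where the discoverability worry above comes from.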

What changed my mind was actually using extension methods in anger myself. Using them firsthand I saw how extension methods could be used to improve both reading and writing C# code.

More readable

The nested nature of traditional static method calls makes them counter-intuitive to read: you're essentially reading back to front. The static method call that comes first is actually what gets executed last.

Say you wanted to get some nicely formatted XML from a file for logging purposes:

string xml = XmlUtils.FormatXml(FileUtils.ReadAllText(PackageUtils.GetRelationshipFile(packageDirectory)));
Logger.Log(xml);

Now compare the same code using extension methods: